AI in Security Operations Centers (SOCs) - Microsoft Defender

- brencronin

- Nov 4, 2025

Updated: Feb 11

Security Copilot - Reference Diagram Overview

The component labeled “Security Copilot” functions primarily as an orchestrator that bridges the Microsoft environment with the OpenAI large language model (LLM) running within Microsoft’s secure ecosystem. In Microsoft Security Copilot, Copilots are orchestrators rather than traditional AI applications built on embeddings or vector databases. Instead of performing semantic searches or querying internal data stores, they use plugins to call the APIs of their respective Microsoft or third-party services.

Unlike traditional Retrieval-Augmented Generation (RAG) architectures that rely on vector database embeddings, Security Copilot accesses environmental data through native security tool APIs, such as those exposed via the Microsoft Graph API. Data retrieval, enrichment, and contextual grounding are performed through plugins, which serve as controlled connectors between the LLM and the environment. The orchestrator uses these plugins to ground the LLM’s response in real-time environmental data, keeping outputs relevant and actionable. Because data access goes through the connected service, Copilots do not need to provision databases or implement their own data security controls; those responsibilities remain with that service. Supporting the Copilots are agents: specialized workers that execute specific operational or analytical tasks as directed by the Copilot.
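The plugin-based orchestration pattern described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Security Copilot's implementation: the `Plugin` and `Orchestrator` classes, the mock API functions, and the keyword-based `plan` step (standing in for the LLM's planning) are all assumptions. What it shows is that grounding context comes from API calls routed through plugins rather than from a vector-store similarity search.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Plugin:
    """Hypothetical connector: a named wrapper around one service API."""
    name: str
    call: Callable[[str], dict]


def mock_device_api(device: str) -> dict:
    # Stand-in for a real endpoint-security API call; returns canned data.
    return {"device": device, "risk": "high", "source": "mock-device-api"}


def mock_identity_api(user: str) -> dict:
    # Stand-in for a real identity API call; returns canned data.
    return {"user": user, "risk": "medium", "source": "mock-identity-api"}


class Orchestrator:
    def __init__(self, plugins: List[Plugin]) -> None:
        self.plugins: Dict[str, Plugin] = {p.name: p for p in plugins}

    def plan(self, prompt: str) -> List[Tuple[str, str]]:
        # Placeholder for the LLM planning step: route "device:" and "user:"
        # tokens to the matching plugin instead of searching a vector store.
        routes = {"device": "device_lookup", "user": "user_lookup"}
        steps = []
        for token in prompt.split():
            kind, sep, value = token.partition(":")
            if sep and kind in routes:
                steps.append((routes[kind], value))
        return steps

    def run(self, prompt: str) -> dict:
        # Each step is a live API call; the results become the LLM's grounding context.
        grounding = [self.plugins[name].call(arg) for name, arg in self.plan(prompt)]
        return {"prompt": prompt, "grounding": grounding}


orchestrator = Orchestrator([
    Plugin("device_lookup", mock_device_api),
    Plugin("user_lookup", mock_identity_api),
])
result = orchestrator.run("Investigate device:WS-01 and user:alice")
```

In a real deployment the grounding list would be passed back to the LLM to compose its answer; the data itself never leaves the connected service's access controls except through that API call.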

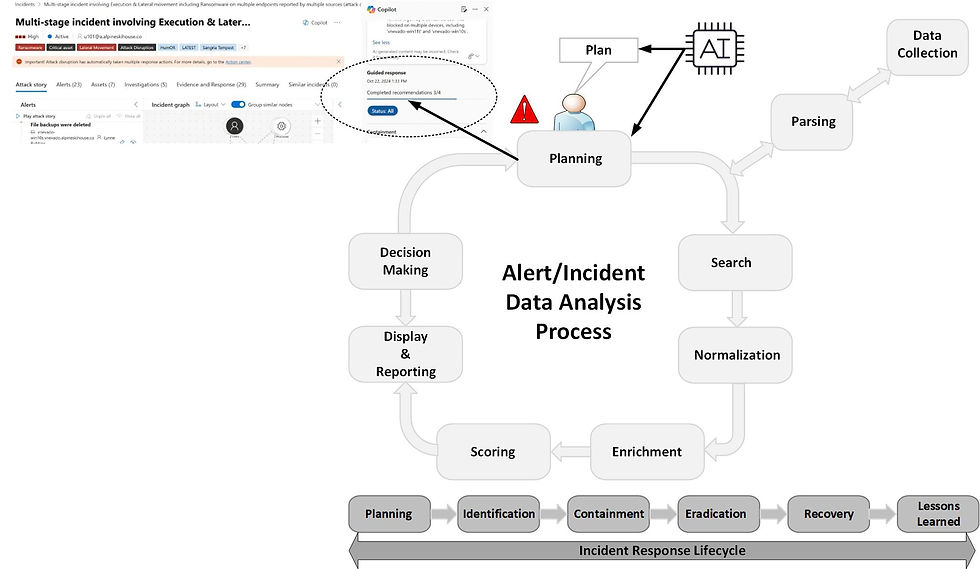

Security Copilot Defender XDR Plugin – Data Analysis Phase Walkthrough

When evaluating the Defender XDR plugin for incident response workflows, it’s essential to assess its capabilities in the context of each data analysis phase. Plan the implementation around how effectively the plugin supports each phase: Planning, Data Search, Normalization, Enrichment, Scoring, Display, Reporting, and Decision Making.

Microsoft has long incorporated guided response capabilities into its security tools, and many of these responses remain relatively static. A common example is a recommendation such as “check with the user or system to verify if this behavior is normal.” However, Security Copilot’s “Guided Response” feature has improved rapidly and now pushes toward more detailed, step-by-step investigation and remediation guidance based on incident details. This aligns directly with the Planning phase.
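The difference between static guidance and incident-aware guidance can be illustrated with a deterministic stand-in. This sketch is hypothetical: Security Copilot generates its steps with an LLM, whereas here the `STEP_LIBRARY` tags and step text are invented examples keyed by detection tag. It only shows the shape of the output, an ordered, incident-specific step list that falls back to generic advice when nothing specific applies.

```python
from typing import Dict, List

# Generic fallback, similar in spirit to the static guidance described above.
STATIC_GUIDANCE = [
    "Check with the user or system owner to verify whether this behavior is normal."
]

# Hypothetical library of incident-specific steps keyed by detection tag.
STEP_LIBRARY: Dict[str, List[str]] = {
    "suspicious_powershell": [
        "Decode and review the PowerShell command line",
        "List child processes spawned by powershell.exe",
    ],
    "impossible_travel": [
        "Compare sign-in locations and timestamps",
        "Review MFA results for both sign-ins",
    ],
}


def guided_steps(incident: dict) -> List[str]:
    """Build an ordered step list from incident details, falling back to static guidance."""
    steps: List[str] = []
    for tag in incident.get("tags", []):
        steps.extend(STEP_LIBRARY.get(tag, []))
    if incident.get("device"):
        steps.append(f"Review the device timeline for {incident['device']}")
    return steps or list(STATIC_GUIDANCE)


plan = guided_steps({"tags": ["impossible_travel"], "device": "WS-01"})
```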

Transitioning from Planning to Data Search (including Data Collection and Parsing) has traditionally been manual, although most data collection and parsing occur beforehand through log onboarding agents. Within Defender XDR, Security Copilot’s integration with Microsoft telemetry simplifies this stage.

For example, “Summarize device information” (from Microsoft Defender for Endpoint) gives high-level visibility into device posture and suspicious activity, while “Summarize user/identity context” (from Microsoft Defender for Identity and Entra) provides insight into anomalies linked to specific identities.
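A device summary ultimately rests on structured facts aggregated from telemetry. The sketch below shows that aggregation step only; Security Copilot's actual summaries are written by an LLM, and the event record shape (`device`, `category`, `severity` keys) is an assumption made for illustration.

```python
from collections import Counter
from typing import List


def summarize_device(device: str, events: List[dict]) -> str:
    """Aggregate hypothetical telemetry records into a one-line device summary."""
    mine = [e for e in events if e["device"] == device]
    by_category = Counter(e["category"] for e in mine)
    high = sum(1 for e in mine if e["severity"] == "high")
    categories = ", ".join(f"{c}: {n}" for c, n in sorted(by_category.items()))
    return f"{device}: {len(mine)} events ({categories}); {high} high severity"


events = [
    {"device": "WS-01", "category": "process", "severity": "high"},
    {"device": "WS-01", "category": "network", "severity": "low"},
    {"device": "WS-02", "category": "process", "severity": "high"},
]
summary = summarize_device("WS-01", events)
# summary == "WS-01: 2 events (network: 1, process: 1); 1 high severity"
```

An LLM layered on top of facts like these can turn them into prose and point out which events look suspicious, but the counts themselves come from the telemetry query, not from the model.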

However, two key weaknesses exist in the Planning and Data Search phases:

- Limited visibility into non-Microsoft telemetry, such as third-party firewall or NDR logs. While this data can be ingested into Microsoft Sentinel for KQL querying, Security Copilot lacks schema awareness of non-Microsoft data sources, which prevents it from dynamically constructing precise queries.
- Non-determinism in AI-generated plans, which can lead to inconsistent recommendations for additional data searches.
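To make the first weakness concrete: constructing a precise query requires knowing the table and column names of the third-party source. The sketch below shows what explicit schema hints make possible. `CommonSecurityLog` is the Sentinel table commonly used for CEF-formatted appliance logs and the column names below exist in it, but the `SCHEMA_HINTS` structure, the `ExampleFW` vendor name, and the idea of supplying such hints to the query generator are assumptions, not a Security Copilot feature.

```python
import ipaddress
from typing import Dict

# Hypothetical schema hints for a third-party firewall source ingested into Sentinel.
SCHEMA_HINTS: Dict[str, Dict[str, str]] = {
    "firewall": {
        "table": "CommonSecurityLog",
        "vendor_filter": 'DeviceVendor == "ExampleFW"',  # hypothetical vendor name
        "src_ip": "SourceIP",
        "dst_ip": "DestinationIP",
        "action": "DeviceAction",
    },
}


def build_kql(source: str, ip: str, lookback: str = "24h") -> str:
    """Build a KQL hunt for one IP using the column names declared for `source`."""
    s = SCHEMA_HINTS[source]  # KeyError here means the source's schema is unknown
    ip = str(ipaddress.ip_address(ip))  # raises ValueError, which blocks query injection
    # `lookback` is assumed to come from trusted configuration, not user input.
    return "\n".join([
        s["table"],
        f"| where TimeGenerated > ago({lookback})",
        f"| where {s['vendor_filter']}",
        f'| where {s["src_ip"]} == "{ip}" or {s["dst_ip"]} == "{ip}"',
        f"| project TimeGenerated, {s['src_ip']}, {s['dst_ip']}, {s['action']}",
    ])


query = build_kql("firewall", "203.0.113.7")
```

Without the hints, a generator can only guess column names, which is exactly where imprecise or failing queries come from.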

The Normalization phase in Defender XDR is partially addressed by mapping telemetry values to “entities” such as IP addresses, usernames, or file names. Security Copilot expands on this by analyzing more complex artifacts (scripts, registry keys, or code) and generating human-readable explanations. This mirrors having an experienced system administrator interpret data in real time, a task analysts have long performed with search engines and, more recently, with LLM tools.
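Entity mapping can be sketched as a field-name lookup that collapses differently named fields from different sources into common entity types. The `FIELD_MAP` below is hypothetical and far smaller than a real mapping; lowercasing accounts and file names assumes case-insensitive identifiers, as on Windows.

```python
from typing import Dict, List, Tuple

# Hypothetical mapping from source-specific field names to entity types.
FIELD_MAP: Dict[str, str] = {
    "RemoteIP": "ip", "SourceIP": "ip", "src_ip": "ip",
    "AccountName": "account", "user": "account",
    "FileName": "file", "file_name": "file",
}


def extract_entities(record: dict) -> List[Tuple[str, str]]:
    """Return sorted (entity_type, value) pairs found in one raw record."""
    entities = set()
    for field, value in record.items():
        entity_type = FIELD_MAP.get(field)
        if entity_type is None or not value:
            continue  # unmapped field (e.g., a timestamp) or empty value
        normalized = str(value).strip()
        if entity_type in ("account", "file"):
            normalized = normalized.lower()  # assumes case-insensitive names
        entities.add((entity_type, normalized))
    return sorted(entities)


# Two differently shaped records normalize to the same entities.
edr_record = {"RemoteIP": "198.51.100.5", "AccountName": "Alice", "Timestamp": "2025-11-04T10:00:00Z"}
fw_record = {"src_ip": "198.51.100.5", "user": "ALICE "}
```

Mapping like this handles simple values; interpreting a script body or a registry value is the part where an LLM adds something a lookup table cannot.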

Next is the Enrichment phase. Historically, enrichment has been the core use case for Security Orchestration, Automation, and Response (SOAR) systems. In this process, the SOAR tool takes an entity from an alert, such as an IP address, file hash, or domain, and queries an external source like a Cyber Threat Intelligence (CTI) platform, returning the results to the analyst for further context.
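The classic SOAR enrichment loop described above can be sketched as: iterate over an alert's entities, dispatch each to the lookup configured for its type, and merge the result back. `MOCK_CTI` is a local stand-in for a CTI platform API, and all names and values here are hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical local CTI feed; a real playbook would call a CTI platform's API here.
MOCK_CTI = {
    "203.0.113.7": {"verdict": "malicious", "tags": ["c2"]},
    "evil.example": {"verdict": "suspicious", "tags": ["phishing"]},
}


def cti_lookup(value: str) -> dict:
    return MOCK_CTI.get(value, {"verdict": "unknown", "tags": []})


# Which lookup to run for each entity type; unlisted types are skipped.
LOOKUPS: Dict[str, Callable[[str], dict]] = {"ip": cti_lookup, "domain": cti_lookup}


def enrich(alert: dict) -> List[dict]:
    """Return one merged record per entity that has a configured enrichment source."""
    results = []
    for entity in alert["entities"]:
        lookup = LOOKUPS.get(entity["type"])
        if lookup is None:
            continue  # no enrichment source configured for this entity type
        results.append({**entity, **lookup(entity["value"])})
    return results


alert = {"entities": [
    {"type": "ip", "value": "203.0.113.7"},
    {"type": "hash", "value": "abc123"},
    {"type": "domain", "value": "good.example"},
]}
enriched = enrich(alert)
```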

Over time, EDR and SIEM platforms have absorbed much of this functionality natively, reducing reliance on external SOAR tools, though SOAR still retains value for certain third-party enrichments and in complex or multi-vendor environments.

Within AI-driven platforms like Security Copilot, enrichment continues to operate through integrations and plugins, such as third-party connectors to services like AbuseIPDB. However, this process remains an orchestration function rather than an AI-driven one, because it consists of querying external systems for data.
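The orchestration nature of this step is easy to see in the request itself: it is a plain HTTP call. The sketch below builds a request for AbuseIPDB's v2 `check` endpoint as we understand its public documentation (query parameters `ipAddress` and `maxAgeInDays`, API key in a `Key` header, score returned as `data.abuseConfidenceScore`); verify these against the current API docs. It is not the Security Copilot connector's code, and the request is built but not sent.

```python
import json
import urllib.parse
import urllib.request

ABUSEIPDB_CHECK = "https://api.abuseipdb.com/api/v2/check"


def build_check_request(ip: str, api_key: str, max_age_days: int = 90) -> urllib.request.Request:
    """Build (but do not send) a reputation lookup for one IP address."""
    query = urllib.parse.urlencode({"ipAddress": ip, "maxAgeInDays": max_age_days})
    return urllib.request.Request(
        f"{ABUSEIPDB_CHECK}?{query}",
        headers={"Key": api_key, "Accept": "application/json"},
        method="GET",
    )


def parse_check_response(body: str) -> dict:
    """Pull the fields an analyst or LLM would use from the JSON response body."""
    data = json.loads(body)["data"]
    return {"ip": data["ipAddress"], "abuse_score": data["abuseConfidenceScore"]}


request = build_check_request("203.0.113.7", "YOUR_API_KEY")
# Sending it would be: urllib.request.urlopen(request).read().decode()
```

Only after `parse_check_response` returns does the AI step begin: interpreting the score alongside the rest of the incident.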

The real value of AI emerges once the enriched data is returned. Security Copilot’s AI models can correlate, interpret, and integrate the new intelligence directly into other phases of analysis, most notably reporting, where contextualized insights enhance accuracy and reduce analyst workload.

The next phase, Scoring, has traditionally been a manual process, whether assigning an initial alert or incident severity (e.g., low, medium, high, or critical) or interpreting the significance of specific indicators observed during an investigation.

Early attempts at automating scoring, before the rise of AI, relied on static rule sets and manually assigned values integrated into logging systems. For example, a system might increase an alert’s priority if the suspicious event occurred on a host with a critical vulnerability or on a system tagged as mission-critical.
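The static rule described above (raise priority for a critical vulnerability or a mission-critical tag) can be written as a small function. The severity ladder matches the low/medium/high/critical scale mentioned earlier; the field names `has_critical_vuln` and `tags` are hypothetical.

```python
SEVERITIES = ["low", "medium", "high", "critical"]


def bump(severity: str, levels: int = 1) -> str:
    """Raise a severity by `levels` steps, capped at critical."""
    index = min(SEVERITIES.index(severity) + levels, len(SEVERITIES) - 1)
    return SEVERITIES[index]


def static_priority(alert: dict, asset: dict) -> str:
    """Apply fixed, manually defined escalation rules to an alert's severity."""
    severity = alert["severity"]
    if asset.get("has_critical_vuln"):
        severity = bump(severity)
    if "mission-critical" in asset.get("tags", []):
        severity = bump(severity)
    return severity


priority = static_priority({"severity": "medium"}, {"has_critical_vuln": True, "tags": ["mission-critical"]})
```

The weakness is visible in the code: every rule and increment is fixed in advance and never learns from outcomes.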

Over time, machine learning models began to augment this process. Instead of relying solely on static thresholds, these models assessed event relationships, frequency patterns, and historical analyst feedback (such as prior false-positive tagging) to adjust scores dynamically.
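Feedback-driven adjustment can be approximated without a full ML model. The sketch below is a deliberately simple stand-in, not how Microsoft's models work: it tracks analyst true/false-positive verdicts per detection rule, smooths the true-positive rate with a Beta(1, 1) prior so a rule with no feedback starts at 0.5, and scales a 0-100 base score by that rate. The scoring formula is an assumption chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class RuleFeedback:
    """Analyst verdicts recorded for one detection rule."""
    true_positives: int = 0
    false_positives: int = 0

    def tp_rate(self, prior_tp: float = 1.0, prior_fp: float = 1.0) -> float:
        # Beta-prior smoothing: a rule with no feedback starts at 0.5
        # instead of jumping to 0 or 1 after a single verdict.
        total = self.true_positives + self.false_positives + prior_tp + prior_fp
        return (self.true_positives + prior_tp) / total


def adjusted_score(base_score: float, feedback: RuleFeedback) -> float:
    """Scale a 0-100 base score: unchanged at a 0.5 TP rate, lower for noisy rules."""
    return min(100.0, base_score * 2 * feedback.tp_rate())


noisy_rule = RuleFeedback(true_positives=0, false_positives=8)  # tp_rate = 1/10
reliable_rule = RuleFeedback(true_positives=8, false_positives=0)  # tp_rate = 9/10
```

With a base score of 60, the noisy rule drops to about 12, the reliable rule is capped at 100, and a rule with no history stays at 60.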

Building on the link between a vulnerability and its associated incident, AI improves the correlation of disparate data sources related to an alert by joining them on key attributes such as asset identifiers and user identities. This correlated data may include known vulnerabilities, exposure information, and threat intelligence indicators from cyber threat intelligence (CTI) sources.
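The correlation itself is essentially a join on shared keys. This sketch joins one alert with vulnerability, exposure, identity-risk, and CTI data using the device ID, user name, and indicator values; all field names and sample values are hypothetical (CVE-2021-44228 is used only as a familiar example identifier).

```python
from typing import Dict, List, Set


def correlate(
    alert: dict,
    vulns_by_device: Dict[str, List[str]],
    exposed_devices: Set[str],
    user_risk: Dict[str, str],
    cti_indicators: Dict[str, str],
) -> dict:
    """Join an alert with other data sources on device ID, user, and IOC values."""
    device, user = alert["device_id"], alert["user"]
    return {
        "alert_id": alert["id"],
        "vulnerabilities": vulns_by_device.get(device, []),
        "internet_exposed": device in exposed_devices,
        "user_risk": user_risk.get(user, "unknown"),
        "cti_matches": {
            ioc: cti_indicators[ioc] for ioc in alert.get("iocs", []) if ioc in cti_indicators
        },
    }


context = correlate(
    {"id": "A1", "device_id": "dev-01", "user": "alice", "iocs": ["203.0.113.7", "198.51.100.9"]},
    vulns_by_device={"dev-01": ["CVE-2021-44228"]},
    exposed_devices={"dev-01"},
    user_risk={"alice": "high"},
    cti_indicators={"203.0.113.7": "known C2 infrastructure"},
)
```

The join is mechanical; the value AI adds is in weighing these joined facts together when assigning a score.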

Moreover, AI adds significant value in this phase by interpreting patterns and artifacts that previously appeared random or obscure. As highlighted in the enrichment stage, AI’s contextual understanding allows these elements to be analyzed with greater precision. When this enriched insight is fed back into the model, it improves the accuracy and contextual relevance of scoring, and with it the overall quality of incident prioritization and analysis.

The next phase, Display and Reporting, has traditionally been largely manual unless supported by built-in visualization components in detection tools such as EDR platforms. Examples include device timelines, which present system activity organized by categories like network, registry, and process events, and incident graphs, which visualize relationships between entities (for example, showing that a specific IP address connected to a particular host).

Report generation involves documenting key findings, response actions, and attribution in a structured format. This, too, has been a manual and time-consuming process, and it is one where AI can add significant value by automating report generation and summarization.
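The two visualizations mentioned above can be modeled as simple data structures: an incident graph as an adjacency map between entities that appear in the same event, and a timeline as time-ordered events grouped by category. The event shape (`time`, `category`, `source`, `target`) is hypothetical, and sorting timestamps as strings assumes they all use the same ISO 8601 format.

```python
from collections import defaultdict
from typing import Dict, List, Set


def build_incident_graph(events: List[dict]) -> Dict[str, Set[str]]:
    """Undirected adjacency map linking entities that appear in the same event."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for event in events:
        graph[event["source"]].add(event["target"])
        graph[event["target"]].add(event["source"])
    return dict(graph)


def build_timeline(events: List[dict]) -> Dict[str, List[str]]:
    """Group events by category, each group in chronological order."""
    timeline: Dict[str, List[str]] = defaultdict(list)
    # Same-format ISO 8601 strings sort chronologically.
    for event in sorted(events, key=lambda e: e["time"]):
        timeline[event["category"]].append(f'{event["time"]} {event["source"]} -> {event["target"]}')
    return dict(timeline)


events = [
    {"time": "2025-11-04T10:02:00Z", "category": "network", "source": "WS-01", "target": "203.0.113.7"},
    {"time": "2025-11-04T10:00:00Z", "category": "process", "source": "WS-01", "target": "powershell.exe"},
]
graph = build_incident_graph(events)
timeline = build_timeline(events)
```

Structures like these are also what an AI-generated report would draw on to describe the sequence of activity in prose.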

Interestingly, the Security Copilot summary is among the first outputs generated by the system. This design choice is logical, as it provides analysts with a concise, human-readable overview of the alert or incident, enabling rapid contextual understanding at the outset of the investigation.

Enhancing this capability further, for example by making these AI-generated summaries accessible via APIs, would allow seamless integration with ticketing systems, dashboards, and case management tools, improving real-time reporting efficiency.
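If summaries were exposed this way, the integration on the receiving side could be as simple as mapping the summary into a ticket payload. This sketch is hypothetical throughout: it does not describe an existing Security Copilot API, and the payload field names are invented for illustration rather than taken from any specific ticketing system.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def summary_to_ticket(
    incident_id: str, summary: str, severity: str, now: Optional[datetime] = None
) -> str:
    """Serialize an AI-generated incident summary as a JSON ticket payload (hypothetical schema)."""
    created = now or datetime.now(timezone.utc)
    payload = {
        "external_id": incident_id,
        "title": f"[{severity.upper()}] Incident {incident_id}",
        "description": summary,
        "source": "security-copilot-summary",
        "created_at": created.isoformat(),
    }
    return json.dumps(payload, sort_keys=True)


ticket = summary_to_ticket(
    "42",
    "Suspicious PowerShell on WS-01 followed by an outbound connection.",
    "high",
    now=datetime(2025, 11, 4, 12, 0, tzinfo=timezone.utc),
)
```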

Finally, Decision Making, while not traditionally a core function of AI, is beginning to see relevant applications. AI-assisted analysis can provide recommendations or confidence-weighted insights that support human decision-making during incident response.
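Confidence-weighted support for human decisions can be sketched as bucketing recommendations by confidence while leaving every action for an analyst to approve. The thresholds (0.9 and 0.6), bucket names, and sample actions are assumptions; the sketch assumes the AI supplies a confidence value between 0 and 1 with each recommendation.

```python
from typing import Dict, List, Tuple


def triage_recommendations(
    recommendations: List[Tuple[str, float]],
    recommend_at: float = 0.9,
    review_at: float = 0.6,
) -> Dict[str, List[str]]:
    """Bucket AI recommendations by confidence; every bucket is still shown to a human."""
    buckets: Dict[str, List[str]] = {"recommended": [], "needs_review": [], "low_confidence": []}
    # Highest-confidence actions first within each bucket.
    for action, confidence in sorted(recommendations, key=lambda r: r[1], reverse=True):
        if not 0.0 <= confidence <= 1.0:
            raise ValueError(f"confidence out of range for {action!r}: {confidence}")
        if confidence >= recommend_at:
            buckets["recommended"].append(action)
        elif confidence >= review_at:
            buckets["needs_review"].append(action)
        else:
            buckets["low_confidence"].append(action)
    return buckets


decision_view = triage_recommendations([
    ("Block 203.0.113.7 at the firewall", 0.4),
    ("Isolate WS-01", 0.95),
    ("Reset alice's password", 0.7),
])
```

Keeping the analyst as the final approver reflects the point above: AI informs the decision rather than making it.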

Ignite 2025: What's new in Microsoft Defender?

The Agentic SOC Era: How Sentinel MCP Enables Autonomous Security Reasoning

References

AI-Driven Guided Response for Security Operation Centers with Microsoft Copilot for Security

Summarize device information with Microsoft Copilot in Microsoft Defender

Microsoft Copilot for Security Design

Use case: Incident response and remediation

Threat Intelligence Briefing Agent
